Model Serving: A Multi-Layered Landscape

Machine learning (ML) models are becoming much larger and more data-intensive, requiring more expensive hardware and infrastructure to train and run. As a result, serving models through the cloud has become a must for large-scale adoption of cutting-edge ML applications.

Model serving is the process of hosting machine learning models on cloud infrastructure and making their functions available via an API so users can incorporate them into their own applications. Model serving is built on top of several layers, each involving varying levels of abstraction and providing trade-offs between complexity, flexibility, and cost. Individuals and companies can deploy their models on the cloud by operating at any of these levels, depending on their internal resources and requirements. While this diversity of options can accommodate varying use-cases, it also contributes to model serving being a complex ecosystem of tools and solutions, with partially overlapping services.

In this blog post, we’ll dive into the different layers that make up the model serving landscape and contrast the considerations involved at each layer. By the end of this post, you should have a better understanding of the model serving components and, hopefully, of which layer best fits your use-case if you intend to deploy models on the cloud!

Why a Deployment Stack?

Before diving into the model serving layers, let’s take a moment to appreciate why you need a deployment stack in the first place. Deploying machine learning on the cloud typically begins with wrapping the model code in a RESTful API library like Flask or FastAPI and hosting it on a server. While suitable for smaller projects or prototypes, this approach encounters challenges as the ML application grows.

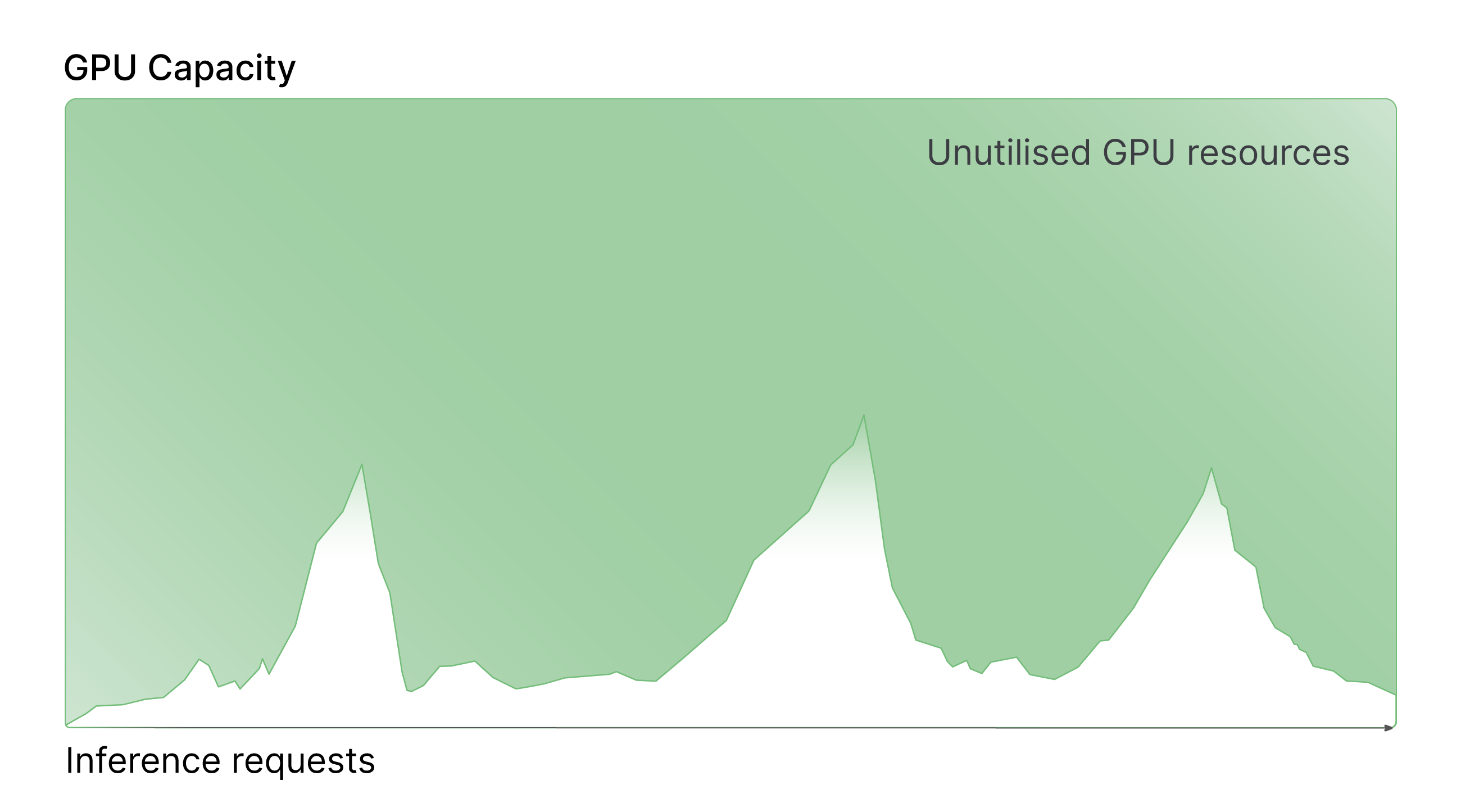
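To make this starting point concrete, here is a minimal sketch of a model wrapped in an HTTP API. It uses Python's standard-library `http.server` in place of Flask or FastAPI so that it runs without extra dependencies. The `predict` function, the `/` POST route, the `features` field, and port 8000 are all placeholders we made up for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Stand-in for a real model: returns the mean of the input features."""
    return sum(features) / len(features)


def handle_predict(body: bytes):
    """Turn a raw JSON request body into an (HTTP status, JSON payload) pair."""
    try:
        features = json.loads(body)["features"]
        return 200, json.dumps({"prediction": predict(features)}).encode()
    except (ValueError, KeyError, TypeError, ZeroDivisionError):
        # Malformed JSON, missing field, non-numeric values, or an empty list.
        return 400, json.dumps({"error": "expected a JSON object with a numeric 'features' list"}).encode()


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8000):
    """Run the API as a single process on a single machine (blocks forever)."""
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()
```

Calling `serve()` starts the server. Everything lives in one process on one machine, which is exactly why this setup struggles once traffic grows or fluctuates.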

Consider an ML-based application that segments customers based on data collected from users playing on a gaming console. While most user traffic typically takes place later in the day, when consumers get back from work or school, different time zones make GPU provisioning complex as requests fluctuate throughout the day. One option is to constantly provision more than enough resources to cover the estimated peak level of activity, guaranteeing that all requests will be met. While this technically works, it’s a very inefficient solution since you incur unnecessary costs whenever the servers are active but idle, which is most of the time in this case.

Another approach to handle this scenario is to be conservative with the compute resources provisioned. While this can help reduce cloud costs, it also comes with the inherent risk of under-serving when request volume is high. This can cause high latency or service outages, both of which can be detrimental from a user-adoption perspective.

Finally, a third approach involves dynamically adjusting the allocation of GPU resources to keep utilisation efficient, ensuring the application remains scalable as user demand grows and workloads fluctuate over time. The issue with this approach is the operational complexity it involves, which may be prohibitive for companies lacking the necessary internal resources and expertise. Fortunately, dynamic resource provisioning can be handled by dedicated platforms, which let you offload the complex infrastructure management involved.
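The three provisioning approaches above can be compared with some quick arithmetic. The sketch below is purely illustrative: the hourly demand profile, the per-GPU capacity, and the price per GPU-hour are made-up numbers, and it assumes a dynamic fleet can be resized instantly every hour, which real autoscalers only approximate.

```python
import math


def gpus_needed(requests, capacity_per_gpu):
    """GPUs required to serve `requests` within one hour."""
    return math.ceil(requests / capacity_per_gpu)


def compare_strategies(hourly_requests, capacity_per_gpu, price_per_gpu_hour, conservative_gpus):
    hours = len(hourly_requests)
    peak_gpus = max(gpus_needed(r, capacity_per_gpu) for r in hourly_requests)

    # 1. Over-provisioning: hold peak capacity around the clock.
    over_cost = peak_gpus * hours * price_per_gpu_hour

    # 2. Conservative provisioning: a small fixed fleet; excess requests go unserved.
    conservative_cost = conservative_gpus * hours * price_per_gpu_hour
    unserved = sum(max(0, r - conservative_gpus * capacity_per_gpu) for r in hourly_requests)

    # 3. Dynamic provisioning: resize the fleet every hour to match demand.
    dynamic_gpu_hours = sum(gpus_needed(r, capacity_per_gpu) for r in hourly_requests)
    dynamic_cost = dynamic_gpu_hours * price_per_gpu_hour

    return {
        "over_provisioned_cost": over_cost,
        "conservative_cost": conservative_cost,
        "conservative_unserved_requests": unserved,
        "dynamic_cost": dynamic_cost,
    }


# Hypothetical day: 18 quiet hours followed by a 6-hour evening peak.
demand = [100] * 18 + [1000] * 6
report = compare_strategies(demand, capacity_per_gpu=500, price_per_gpu_hour=2.0, conservative_gpus=1)
```

With these made-up numbers, over-provisioning costs 96 per day, the conservative fleet costs 48 but leaves 3,000 requests unserved, and the dynamic fleet serves everything for 60.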

Each platform provides its own set of services catering to different kinds of use cases. Consequently, making an educated choice when selecting a serving solution is paramount. As we’ve briefly touched upon, these solutions involve different degrees of complexity that correlate with different levels of abstraction, which we’ll dive into in the following sections.

An Abstraction Spectrum

ML model deployment spans from low-level, do-it-yourself options to high-level, fully-managed solutions. At one end of the spectrum, there are services that abstract away most of the complexity. You simply upload your model and interact with it through API calls. These services handle infrastructure provisioning, scaling, and maintenance, allowing you to focus on your model and application logic.

At the other end of the spectrum, there are services that provide bare-metal access to hardware, essentially handing you the keys. With this level of control, you are responsible for developing the entire software stack, configuring infrastructure, and managing every detail of your deployment.

The services in-between offer varying levels of abstraction, striking a balance between control, convenience and cost. They might provide pre-configured machine learning environments, containerization support, or specialised tools to facilitate different aspects of model deployment.

The level of abstraction of a service has a direct impact on flexibility and ease of development, as well as on the potential for lock-in and on running costs. While it might be tempting to opt for services at the high-abstraction end of the spectrum, which offer the quickest and simplest path to deploying a model, there is a balance to be struck between flexibility, complexity, and cost at every layer of the stack. Generally:

- Lower abstraction services grant you the highest degree of flexibility and cost control since you can manage all of the parameters involved when deploying a model. Choosing lower abstraction tools is generally best if you want to customise your deployment architecture to fulfil your needs, but this also typically comes at the cost of more time and effort spent building and managing different parts of the stack instead of developing your model. Managing a bigger portion of the deployment yourself also means having more difficulty migrating to similar services since you’ll need to re-adapt larger chunks of the pipeline to fit the target service’s ecosystem.

- Higher abstraction services conversely involve lower degrees of lock-in. Because higher abstraction layers provide more hands-off deployment management, you typically invest less time on custom code, and therefore a smaller portion of the pipeline needs to be adapted when you want to migrate between platforms. Choosing higher abstraction tools is generally best if you want to offload more of the deployment management, and instead focus on model development. But this comes at the expense of higher deployment costs, especially as the application scales in usage. This is because these services are typically offered at a premium due to the convenience created, and they don’t provide as much flexibility to adjust deployment parameters to optimise your own cost structure.

Low- and high-abstraction approaches cater to different user personas, but there are also nuances between the various levels of the spectrum. In the following section, we’ll dive deeper into each layer of the stack to understand what it entails.

One Stack, Multiple Layers

As we’ve discussed above, the ML model deployment stack is made up of multiple layers ranked by their level of abstraction. From the highest to the lowest level of abstraction, the layers we cover here are model endpoints, serverless, managed services, and bare metal.

Model Endpoints

The topmost layer of the stack provides the simplest deployment process. Here, you can save your model as a binary (a pre-compiled file that contains a machine learning or deep learning model) and upload it to the endpoint provider of your choice with just a few clicks. A model endpoint is a service that allows users to access a machine learning model through an API. The endpoint lets users run model inference using the provider’s resources.

Depending on your needs, some endpoint platforms may be more suitable if you still want to keep some degree of control over how the endpoint is served. This typically involves using third-party API libraries like Flask or FastAPI to build the endpoint. Endpoint providers include Amazon SageMaker, Anyscale, Azure ML Platform, Baseten, Cerebrium, Lepton AI, Mystic, Paperspace, Replicate, Valohai, Vertex AI, Beam, LaunchFlow, Modal and Octo AI.

Average pay-per-compute pricing is $0.025/hour (assuming 10 calls in an hour), but pricing varies greatly between models and it’s often difficult to get a clear estimate of the total cost you will end up incurring. To mitigate this, some platforms provide fixed-fee plans ranging from $40 to $100 per month, but most only offer custom quotes.

Considerations: Managing an application at this level involves:

- Pipeline Adaptation: You need to rewrite code wrappers when migrating to another provider, given that different providers typically use different deployment tools. For example, Replicate uses Cog to build and deploy models, while Baseten uses Truss to package, deploy and invoke models. Even if you have not extensively customised your code for a particular provider initially, you may still be restricted in your choice of frameworks and tools depending on what the provider supports.

- Technical Expertise: Making proper use of services that provide customization options requires the skills to develop a fully operational model serving application, typically with tools like vLLM, Flask, or FastAPI. Some platforms also give you the flexibility to deploy from a Docker container, so being able to set up the environment and write a Dockerfile can be a plus. Individuals and companies lacking this expertise may not be able to take full advantage of such resources.
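One common way to limit the pipeline adaptation described above is to keep the model logic in a provider-agnostic core and confine provider-specific code to thin wrappers. The sketch below illustrates the idea with two hypothetical providers. `ProviderAWrapper` and `ProviderBWrapper` are invented for this example and do not reproduce the actual Cog or Truss interfaces, and the keyword-counting "model" is a toy stand-in.

```python
class SentimentModel:
    """Provider-agnostic core: everything that is actually about the model."""

    def load(self):
        # A real model would load weights here; this toy uses a word list.
        self.positive_words = {"good", "great", "fast"}

    def run(self, text: str) -> dict:
        score = sum(word in self.positive_words for word in text.lower().split())
        return {"label": "positive" if score else "neutral", "score": score}


class ProviderAWrapper:
    """Hypothetical provider that calls setup() once, then predict(text=...)."""

    def setup(self):
        self.core = SentimentModel()
        self.core.load()

    def predict(self, text: str) -> dict:
        return self.core.run(text)


class ProviderBWrapper:
    """Hypothetical provider that calls load() once, then predict(request_dict)."""

    def __init__(self):
        self.core = SentimentModel()

    def load(self):
        self.core.load()

    def predict(self, model_input: dict) -> dict:
        return self.core.run(model_input["text"])
```

Under this layout, migrating means rewriting only a few lines of wrapper code, although any framework restrictions imposed by the target provider still apply to the core.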

Serverless

A serverless service is a cloud service that allows you to easily and quickly deploy your ML models for inference without the need to manage any underlying infrastructure. With serverless deployment, you simply upload your trained model and specify the resources it needs to run. The service automatically provisions the necessary compute resources and manages the scaling and availability of your model. This means that you don’t need to worry about provisioning and managing servers, scaling your model to meet demand, or ensuring that it is available to make predictions 24/7. Some services are specialised in deep learning and offer relevant tools bundled with their core offering.

At the serverless layer, your primary responsibility is the high-level management of the system, either through code or via a Docker container. Everything related to setting up the operating software, virtualization, storage or networking is managed for you, which considerably simplifies many aspects of the deployment process compared to building infrastructure from the ground up. Dedicated serverless platforms include Banana, CoreWeave, Inferless and Runpod.

Average pay-per-compute pricing is $1.41/hour, which is slightly costlier than bare metal offerings. Pricing also varies greatly depending on the hardware used and on whether you reserve instances for a set period of time.

Considerations: Managing an application at this level involves:

- Technical Expertise: Even though serverless platforms automatically adjust resource allocation to user requests, you still need to select the appropriate configuration. This requires some familiarity with building Docker containers and using the custom code wrappers of a given provider. Further, specifying the resources to manage requires understanding how the model behaves and what kind of user demand is expected.

- Pipeline Adaptation: The serverless layer is generally less prone to lock-in due to Docker's status as a universal standard for containerized applications. However, even with this level of flexibility, you may still need to adapt your configuration to meet specific requirements of the underlying cloud provider.
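To give a feel for what specifying resources involves, here is a rough sizing sketch based on Little's law (requests in flight = arrival rate × time per request). The function name, its parameters, and the 25% headroom default are our own assumptions, not settings taken from any particular serverless platform.

```python
import math


def required_replicas(requests_per_second, seconds_per_request,
                      concurrency_per_replica=1, headroom=0.25):
    """Estimate how many model replicas are needed to keep up with demand."""
    # Little's law: average number of requests being processed at any moment.
    in_flight = requests_per_second * seconds_per_request
    # Pad the estimate so short bursts do not immediately cause queueing.
    padded = in_flight * (1 + headroom)
    # Always keep at least one replica available.
    return max(1, math.ceil(padded / concurrency_per_replica))


# 20 requests/s at 0.5 s each, with each replica handling 4 requests at once.
evening_peak = required_replicas(20, 0.5, concurrency_per_replica=4)
```

Here `evening_peak` comes out at 4 replicas: 10 requests in flight, padded to 12.5, divided across replicas that each handle 4 concurrent requests. Getting the inputs right is the hard part, since it requires measuring the model's latency and forecasting demand.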

Managed Services

For developers who want to get partially involved in setting up the infrastructure without needing to build everything themselves, managed services can be a good option.

Managed services refer to all bundled or stand-alone services provided by cloud platforms to facilitate setting up a deployment infrastructure. Such services include dedicated virtual machines (VMs) that come with pre-installed operating systems and can be used to run applications and services, networking services that allow users to easily set up and manage virtual networks and other network resources, and monitoring services that allow users to monitor the performance and health of their applications and infrastructure.

At this level, you need to design your system and connect these separate services in a way that fits your design, requirements, and intended use-cases, but you don’t need to build each service yourself since they are offered pre-packaged. Platforms offering managed services include AWS, Microsoft Azure, GCP, and IBM Cloud.

Depending on the service, pricing can range from a few dollars to hundreds of dollars a month. Most vendors provide utilities to estimate the cost of all selected services, but the actual total cost can diverge from these estimates depending on actual usage.

Considerations: Managing an application at this level involves:

- Technical Expertise: Being able to make the appropriate design choices requires broad technical expertise in systems design. Familiarity with each of the various building-blocks is also needed to know which service to use and how to integrate it within the overall deployment pipeline.

- Range of Services: Different platforms include different services with some offering unique add-ons. Because services from one provider can’t be ported to another, operating at this level often involves making trade-offs between partially overlapping ranges of services when selecting a specific vendor.

Bare Metal

At this level, you need to set up both machines and network infrastructure yourself. A bare metal machine is a physical server that is dedicated to a single customer rather than shared with other customers, and is often used for applications that require high performance, low latency, or large amounts of memory or storage. Bare metal providers typically offer a range of server configurations, including different processor types, memory sizes, and storage options. Customers can choose the specific configuration that best meets their needs, and can then install and run their own operating systems and applications on the server.

In this layer, you are in control of both VM and network configurations, and depending on the provider, you may get a pre-defined operating system image with the tools you want as well as SSH access to the machine. There is a wide range of options providing GPU-powered infrastructure, including CrusoeCloud, FluidStack, Genesis Cloud, Google Cloud GPUs, JarvisLabs.ai, Lambda Labs, Latitude.sh, and Shadeform.

Average pay-per-compute pricing is $1.30/hour. Pricing also varies greatly depending on the hardware used and on whether you reserve instances for a set period of time.
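The gap between the average serverless price ($1.41/hour) and the average bare metal price ($1.30/hour) is easier to interpret with a quick break-even calculation. The sketch below rests on two simplifying assumptions of ours: serverless compute is billed only for the hours it is actually busy, while a bare metal machine is billed around the clock. The 730 hours per month figure is also an approximation, and real pricing models are more nuanced.

```python
SERVERLESS_PRICE = 1.41   # $/hour, average quoted in this post
BARE_METAL_PRICE = 1.30   # $/hour, average quoted in this post
HOURS_PER_MONTH = 730     # assumed average month length in hours


def monthly_costs(active_fraction):
    """Return (serverless, bare_metal) monthly cost for a given busy fraction."""
    # Assumption: serverless bills only while the model is handling traffic.
    serverless = SERVERLESS_PRICE * HOURS_PER_MONTH * active_fraction
    # Assumption: bare metal bills for every hour, busy or idle.
    bare_metal = BARE_METAL_PRICE * HOURS_PER_MONTH
    return serverless, bare_metal


def break_even_active_fraction():
    """Busy fraction above which bare metal becomes the cheaper option."""
    return BARE_METAL_PRICE / SERVERLESS_PRICE
```

Under these assumptions the break-even point sits at roughly 92% utilisation, so the lower hourly rate of bare metal only pays off for workloads that keep the hardware busy almost all the time.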

Considerations: Managing an application at this level involves:

- Technical Expertise: Because you operate at the bottom-most layer, this involves the highest level of complexity across the different layers. The high operational burden includes complex orchestration, maintenance and scalability challenges, intricate cost management, and a need for specialised technical expertise to manage machine configurations, network setups, and consistent infrastructure performance.

- Significant Lock-In: Transferring an infrastructure setup to a different cloud provider may present hurdles. Migrating between providers typically entails adapting scripts and managing dynamic provisioning through vendor-specific APIs. This adds complexity to transitions and limits movement between different cloud environments.

Conclusion

The spectrum of services in cloud ML model deployment offers a range of choices, from full control and flexibility to fully managed convenience. Your choice should align with your project's requirements, timeline, and your willingness to manage infrastructure. Understanding the technical relationships and dependencies between these layers is thus crucial for successful model deployment. We hope this post helped you understand the intricacies involved at each level and will serve as a useful resource when drafting your cloud deployment strategy!
