Introducing the Unify LLM Hub

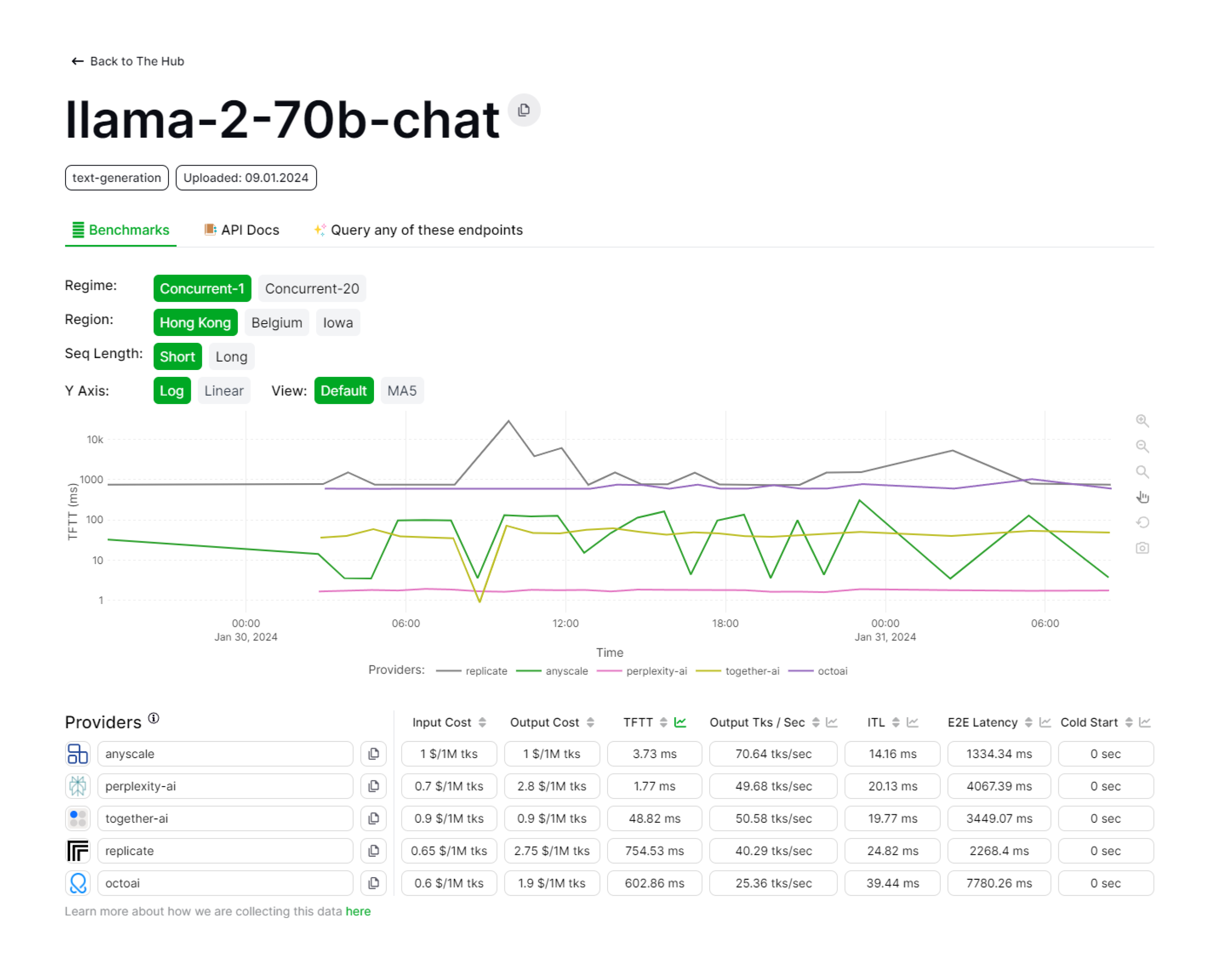

We’re very excited to announce the Unify LLM Hub: a collection of LLM endpoints with live runtime benchmarks, all plotted over time 📈

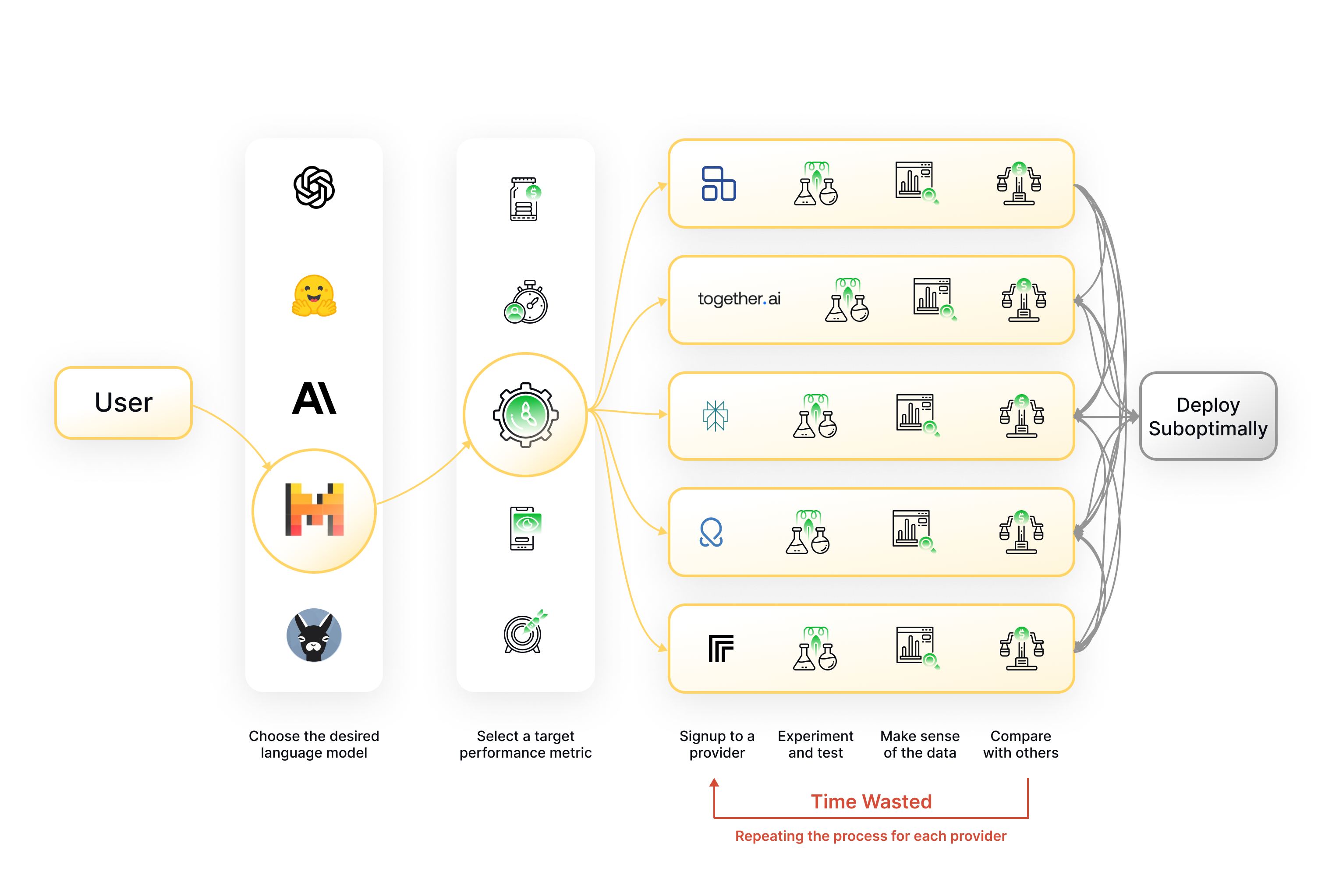

Choosing which LLM to use is hard 🤖, and even once the model is settled, choosing the right provider for it is just as hard.

We rigorously benchmark the runtime performance of each provider, which makes it much simpler to pick the right one for a given application. Our unified API then makes it easy to test the chosen endpoints and deploy them in production, without creating a separate account for each provider 🔑

We test across different regions 🌏 (Asia, US, Europe), with varied concurrency 🔀 and sequence length 🔡. By plotting results over time, our dashboard shows how stable or variable each endpoint is, and how it evolves as APIs are updated and systems change. This is essential for making an informed decision about which provider to use, as we explain in our post *Static LLM Benchmarks Are Not Enough*.

Before the Hub, choosing an LLM provider looked like this:

Now, with every LLM and every provider in one place and rigorously benchmarked, it looks like this:

The Hub is a work in progress ⚒️, and we will be releasing new features every week 🚀

We’re very excited to see what the community does with it! 😊

Wish your LLM deployment were faster, cheaper and simpler?

Use the Unify API to send your prompts to the best LLM endpoints and get your LLM applications flying.